|

|

|

|

|

|

|

|

|

|

|

|

Code [GitHub] | Paper [arXiv] | Cite [BibTeX]

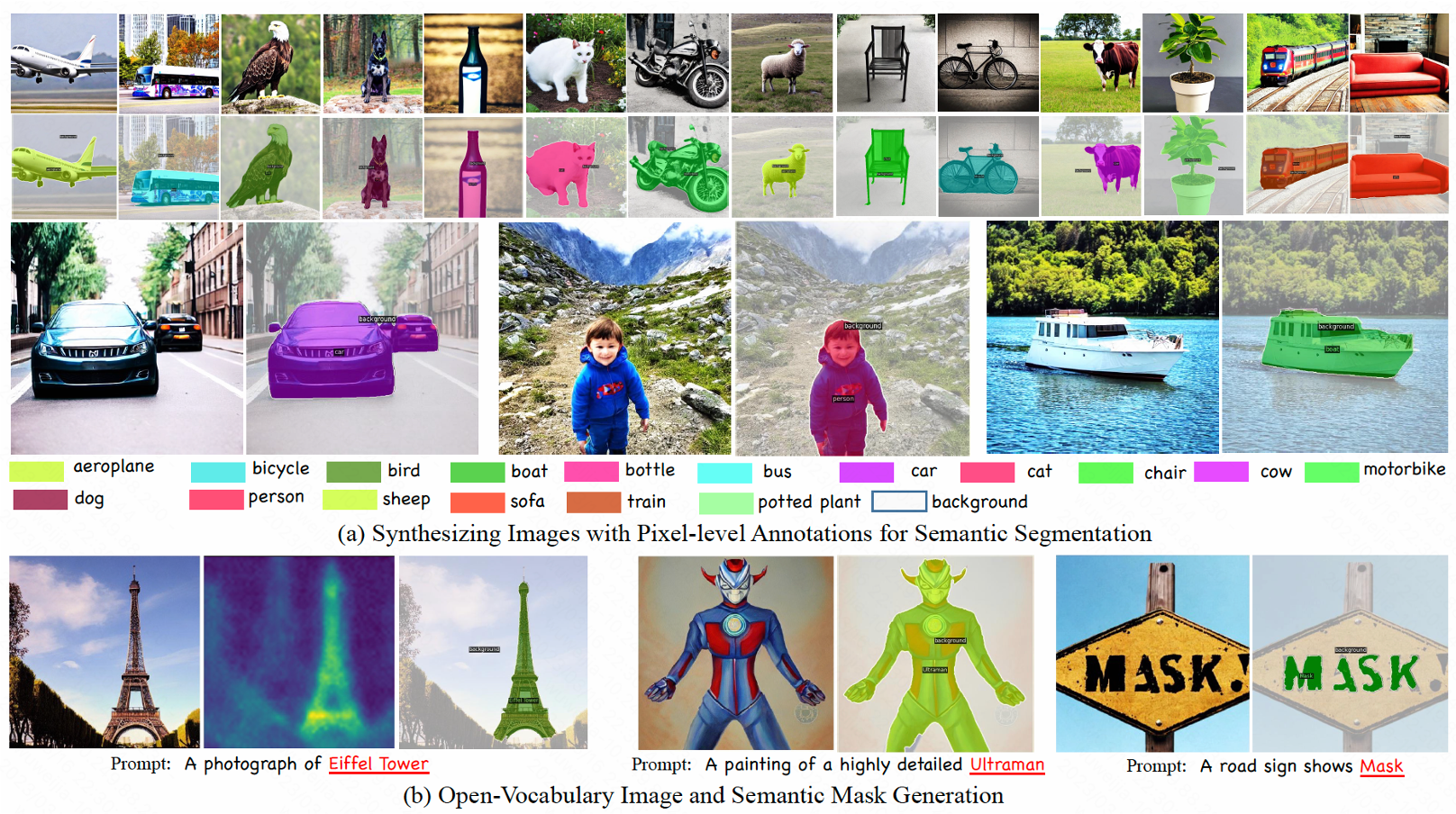

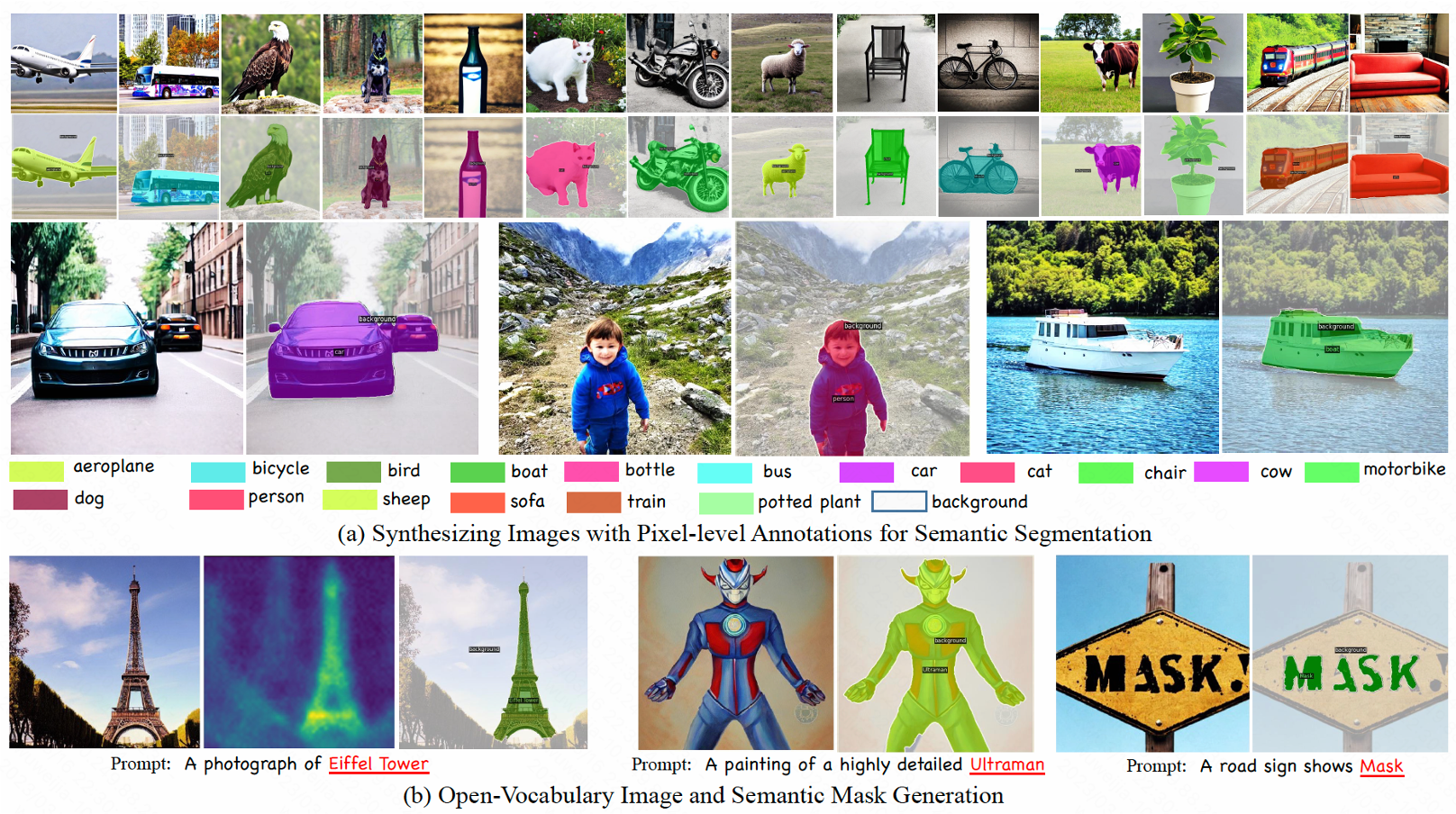

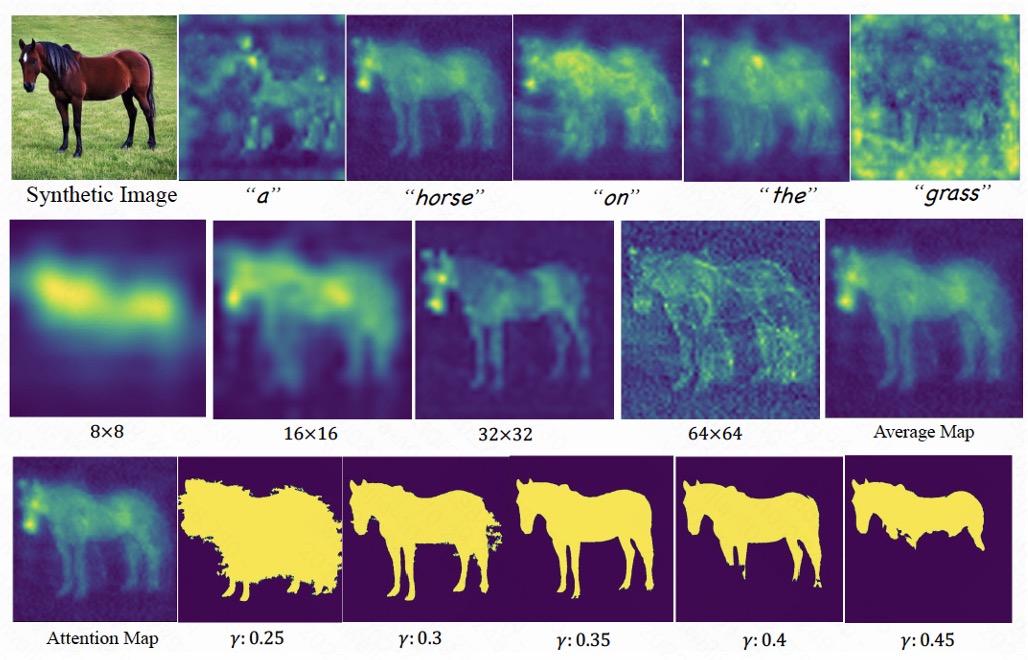

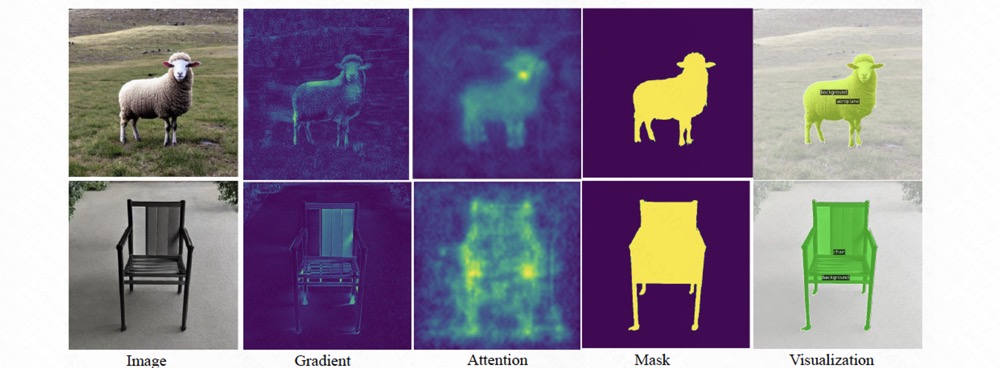

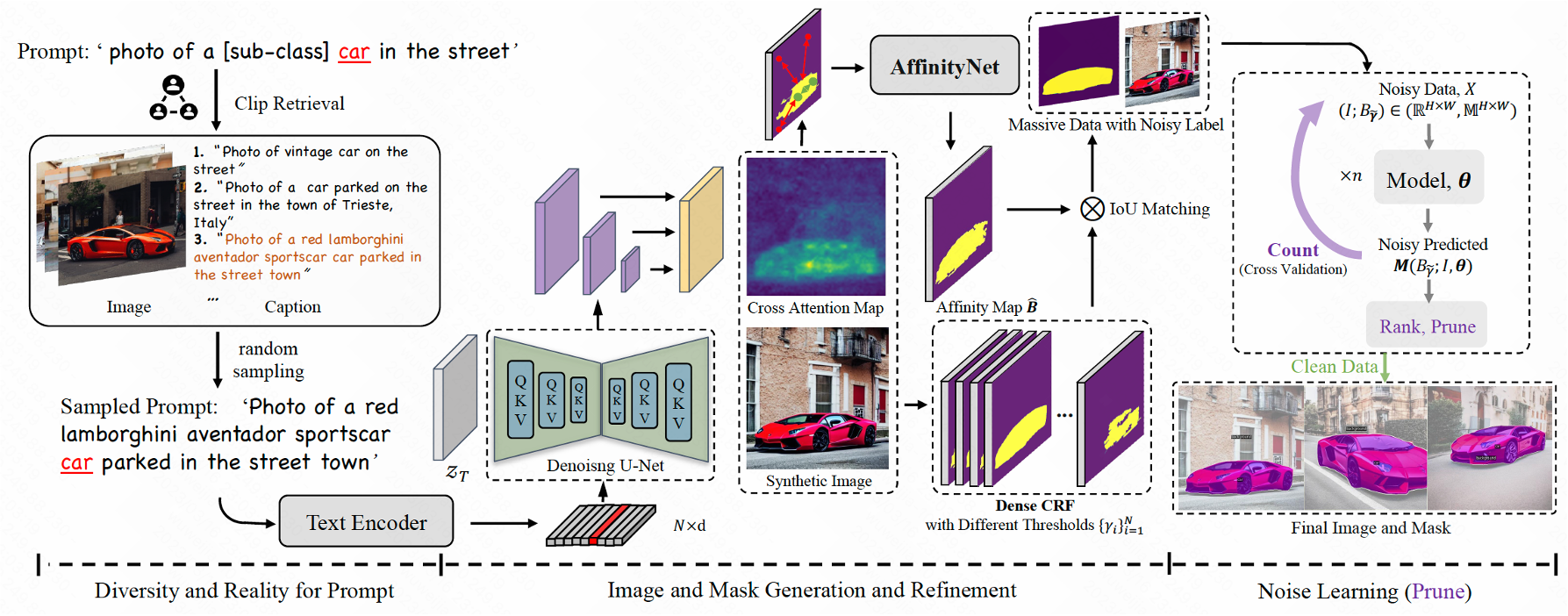

A ‘good’ mask annotation satisfies two conditions: 1) it is class-discriminative, and 2) it is a high-resolution, precise mask. The average map meets both conditions, being class-discriminative and fine-grained, which suggests it can be used for semantic segmentation.
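To make the idea of turning an average map into a mask annotation concrete, here is a minimal sketch. It assumes the per-step maps are already available as a `(T, H, W)` NumPy array, and it uses a plain min-max normalization followed by a fixed threshold. The function name `average_map_to_mask` and the `threshold` value are hypothetical and for illustration only; this is a generic average-and-binarize step, not DiffuMask's actual mask refinement procedure.

```python
import numpy as np


def average_map_to_mask(maps: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Hypothetical helper: average a (T, H, W) stack of maps and binarize it.

    Assumption: higher values mean "more likely to belong to the class".
    """
    avg = maps.mean(axis=0)                   # (H, W) average map over the T maps
    lo, hi = avg.min(), avg.max()
    norm = (avg - lo) / (hi - lo + 1e-8)      # min-max normalize to [0, 1]
    return norm >= threshold                  # boolean foreground mask


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    maps = 0.1 * rng.random((5, 8, 8))        # weak background response
    maps[:, 2:6, 2:6] += 1.0                  # strong response on the "object"
    mask = average_map_to_mask(maps)
    print(mask.astype(int))
```

A fixed global threshold is the simplest choice; in practice an adaptive threshold or a learned refinement step would likely produce sharper boundaries.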

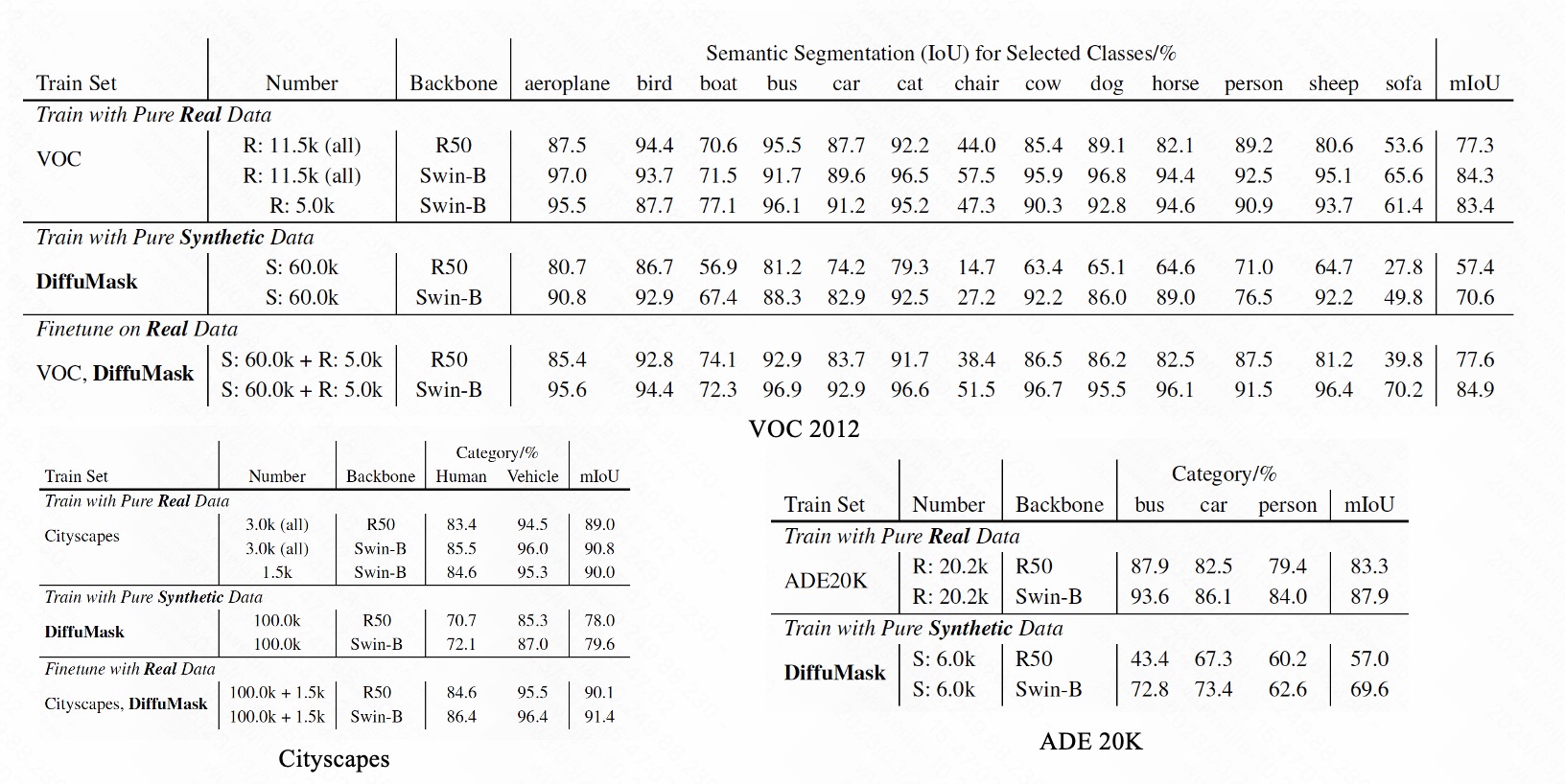

Quantitative results for Protocol-I evaluation on semantic segmentation.

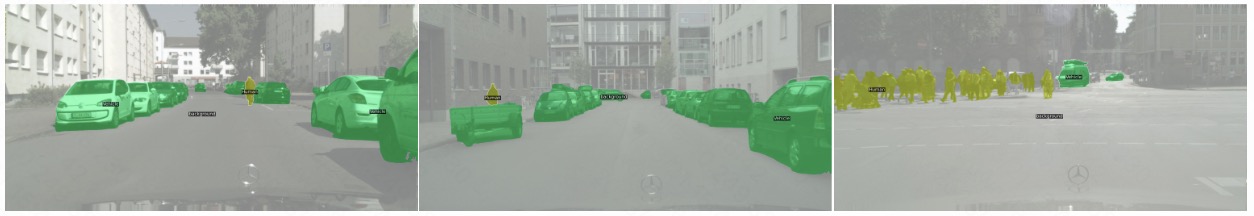

Qualitative Results

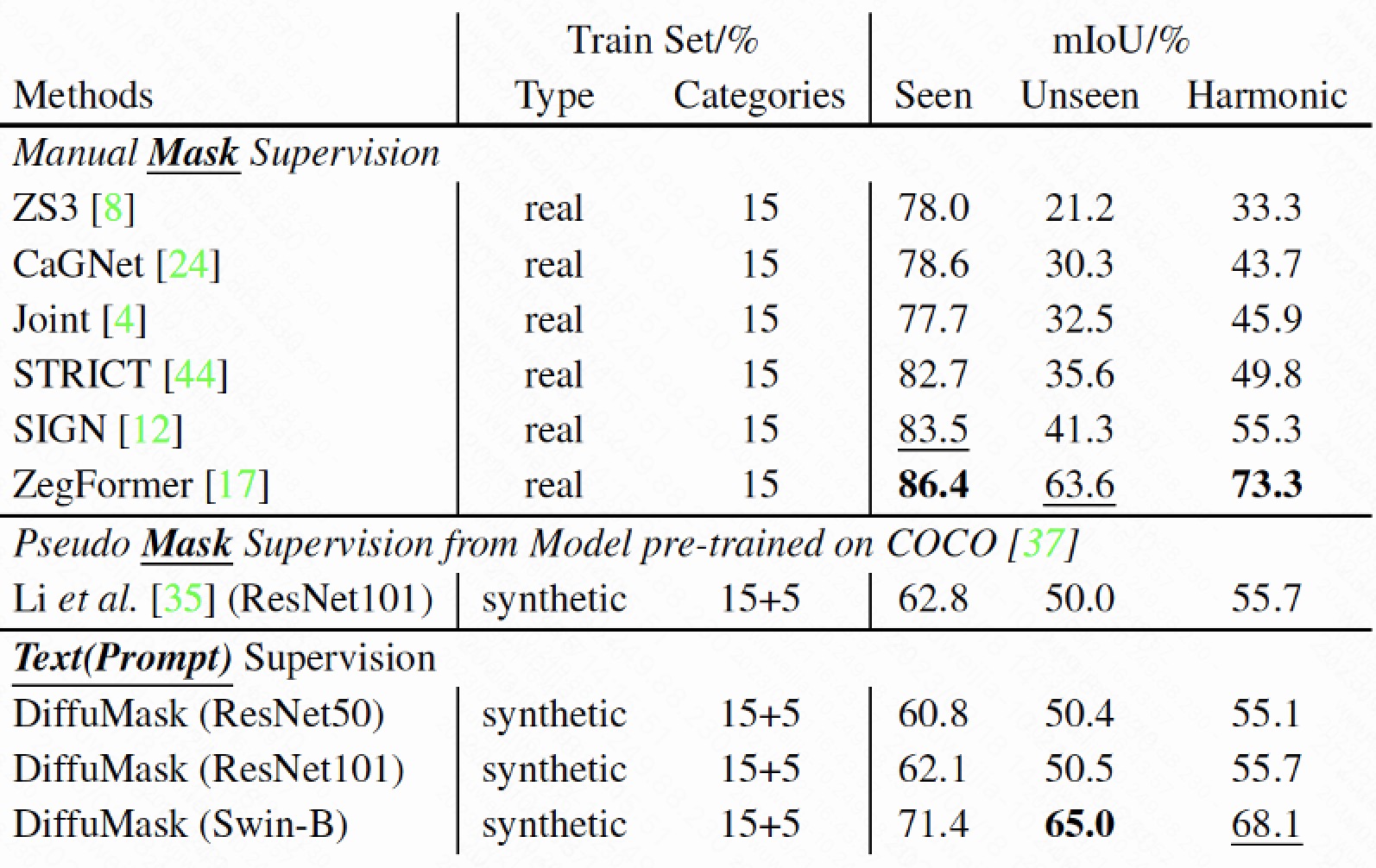

Comparison with the previous ZS3 methods on PASCAL VOC.

“Seen”, “Unseen”, and “Harmonic” denote the mIoU on seen categories, the mIoU on unseen categories, and their harmonic mean, respectively. These ZS3 methods are trained on the PASCAL VOC training set.
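The three columns can be computed from a per-class IoU vector. The sketch below builds IoU from a confusion matrix, averages it separately over seen and unseen class ids, and combines the two with the harmonic mean $H = \frac{2 \cdot S \cdot U}{S + U}$. The function names, the `ignore_index=255` convention, and the class-id split are illustrative assumptions rather than the evaluation code used for the table.

```python
import numpy as np


def per_class_iou(pred: np.ndarray, gt: np.ndarray, num_classes: int,
                  ignore_index: int = 255) -> np.ndarray:
    """IoU for each class from integer label maps (hypothetical helper)."""
    valid = gt != ignore_index                          # drop ignored pixels
    idx = num_classes * gt[valid].astype(int) + pred[valid].astype(int)
    cm = np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)
    tp = np.diag(cm)                                    # correctly labeled pixels
    union = cm.sum(axis=0) + cm.sum(axis=1) - tp        # |pred ∪ gt| per class
    with np.errstate(divide="ignore", invalid="ignore"):
        return tp / union                               # NaN if class never appears


def seen_unseen_harmonic(iou: np.ndarray, seen_ids, unseen_ids):
    """Seen mIoU, unseen mIoU, and their harmonic mean."""
    seen = float(np.nanmean(iou[seen_ids]))
    unseen = float(np.nanmean(iou[unseen_ids]))
    denom = seen + unseen
    harmonic = 2.0 * seen * unseen / denom if denom > 0 else 0.0
    return seen, unseen, harmonic


if __name__ == "__main__":
    gt = np.array([[0, 0], [1, 1]])
    pred = np.array([[0, 1], [1, 1]])
    iou = per_class_iou(pred, gt, num_classes=2)
    print(seen_unseen_harmonic(iou, seen_ids=[0], unseen_ids=[1]))
```

The harmonic mean is used instead of the arithmetic mean because it stays low whenever either the seen or the unseen mIoU is low, so a method cannot score well by doing well on seen classes alone.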

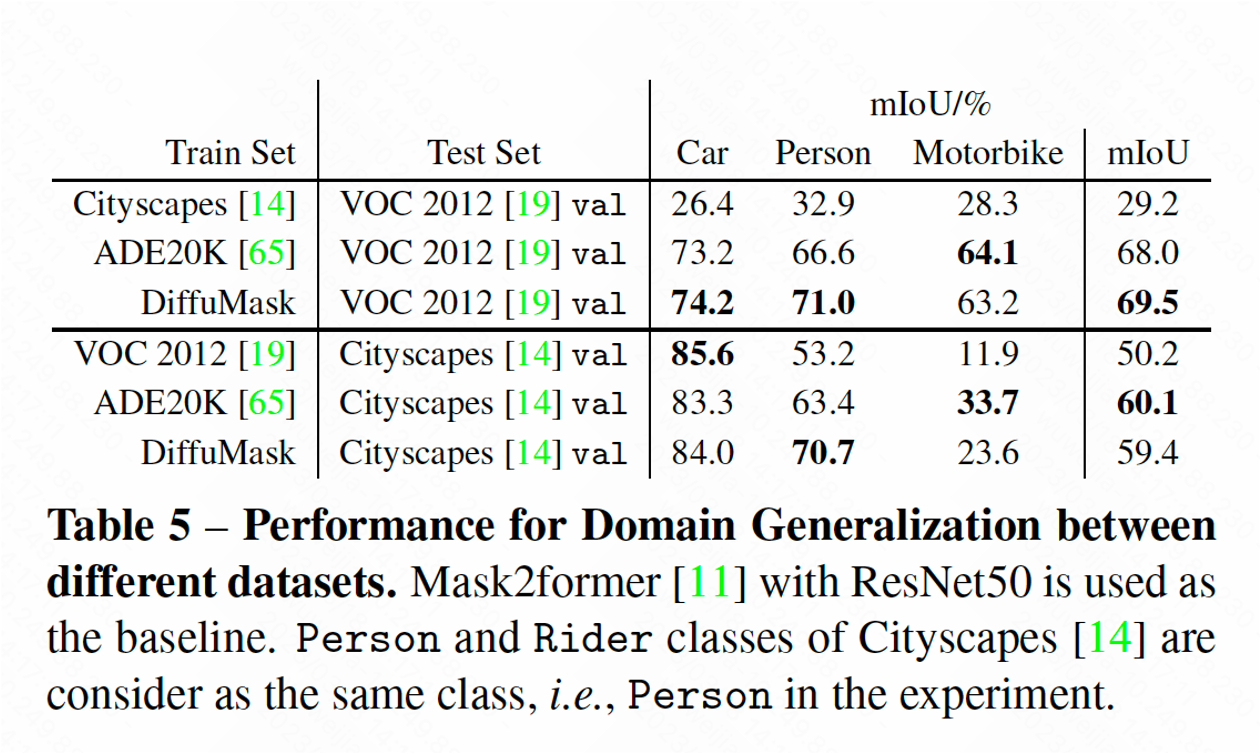

Performance for Domain Generalization between different datasets.

The table presents cross-dataset validation results, which evaluate how well models trained on each dataset generalize to other datasets. Compared with real data, DiffuMask shows strong domain generalization: for example, on VOC 2012 val, training on DiffuMask data yields 69.5%, versus 68.0% when training on ADE20K.

Based on a template by Ziyi Li and Richard Zhang.